You know that feeling when someone says “AI” and you picture robots taking over? Let’s set that aside for now. Real AI is less sci-fi and more like spreadsheets meeting intuition. It’s trained on vast amounts of data, but it’s also shaped by human choices.

Not every problem needs a billion-parameter model. Sometimes a simple decision tree does the trick. Other times, you’re stitching together text, images, and sound like a digital DJ. And yeah, that’s where things like transformers or diffusion models come in.

The field’s exploding with jargon, and honestly? Half the terms sound like they were invented during a late-night hackathon. But you don’t need to memorize acronyms to get how this stuff works.

Let’s skip the lecture. Instead, we’ll discuss what really matters: which models solve specific problems and why you’d choose one over another.

Key Takeaways

1. AI model types aren’t interchangeable. A model that writes poetry won’t help you forecast inventory and vice versa.

2. Don’t assume deep learning is “better.” For structured data or small datasets, a random forest or XGBoost often wins. And your ops team will thank you.

3. LLMs? They’re powerful, but if you’re classifying support tickets, you’re using rocket fuel when a bicycle will do.

4. The main issue isn’t algorithms; it’s data quality, latency, and whether you can explain the output to a person.

5. Focus on hybrids like graph networks and neuro-symbolic systems. That’s the future of practical AI.

What Is an AI Model? (Quick Primer)

So, what is an AI model, really?

It’s not a robot brain. It’s not even “intelligence” in the human sense. As computer scientist Michael I. Jordan once put it:

AI is not about mimicking humans. It’s about building systems that can act intelligently based on data.

In practice, an AI model is a mathematical function, often a very complex one, that maps inputs to outputs by learning patterns from data. Yoshua Bengio, a pioneer in deep learning, describes it as:

A system that discovers representations from observations, without being explicitly programmed for each task.

It learns from examples, like customer transactions or medical scans. Then it adjusts its settings to make useful predictions or decisions. As Fei-Fei Li has noted,

AI doesn’t ‘know’ anything. It infers.

And that’s the key: models don’t understand. They approximate. They generalize. Sometimes brilliantly. Sometimes in ways that surprise even their creators.

Broad Categories of AI Models (Based on Learning Paradigms)

AI models learn in various ways. This affects their design and use. Researchers group these methods as supervised, unsupervised, reinforcement, or hybrids. Let’s begin with the one most people see first: supervised learning.

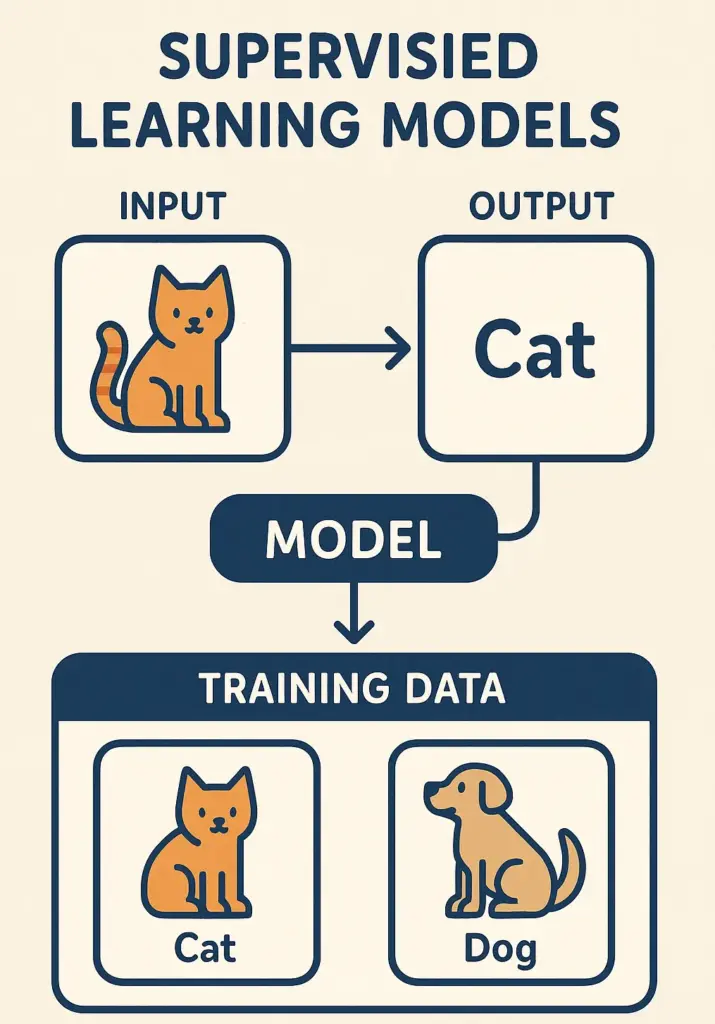

1. Supervised Learning Models

Supervised learning is like having a “teacher” for AI. You provide the model with examples, each labeled with the correct answer. It then learns to make connections between them.

Input: an email.

Label: “spam” or “not spam.”

Input: a tumor scan.

Label: “benign” or “malignant.”

The model’s job? Learn the hidden rules that link inputs to outputs. Not by memorizing, but by spotting patterns across thousands or millions of examples.

As Andrew Ng famously said,

Supervised learning is the workhorse of machine learning.

And for good reason: it powers everything from credit scoring and fraud detection to speech recognition and product recommendations.

Models range from simple (linear regression) to complex (deep neural nets), but they all need labeled data. That’s their main hurdle. Good labels require time, expertise, and human labor, and teams often spend more time on labeling than on tuning models.
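To make the “teacher” idea concrete, here is a minimal sketch of supervised learning: a tiny logistic regression trained by gradient descent. The dataset, the two features (link count and ALL-CAPS word count), and the hyperparameters are all made up for illustration.

```python
import math

# Hypothetical labeled examples: (links, all_caps_words) -> 1 = spam, 0 = not spam.
EMAILS = [
    ((0.0, 0.0), 0), ((1.0, 0.0), 0), ((0.0, 1.0), 0), ((1.0, 1.0), 0),
    ((4.0, 3.0), 1), ((5.0, 4.0), 1), ((3.0, 5.0), 1), ((6.0, 2.0), 1),
]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, features):
    """Probability that an email with these features is spam."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)

def train(examples, epochs=2000, lr=0.1):
    """Stochastic gradient descent on the log loss."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            # The label is the "teacher": the error is prediction minus truth.
            error = predict_proba(weights, bias, features) - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias

weights, bias = train(EMAILS)
print(round(predict_proba(weights, bias, (5.0, 5.0)), 3))  # an unseen, spammy-looking email
```

Notice that nothing in the code says “links mean spam.” The rule comes entirely from the labels.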

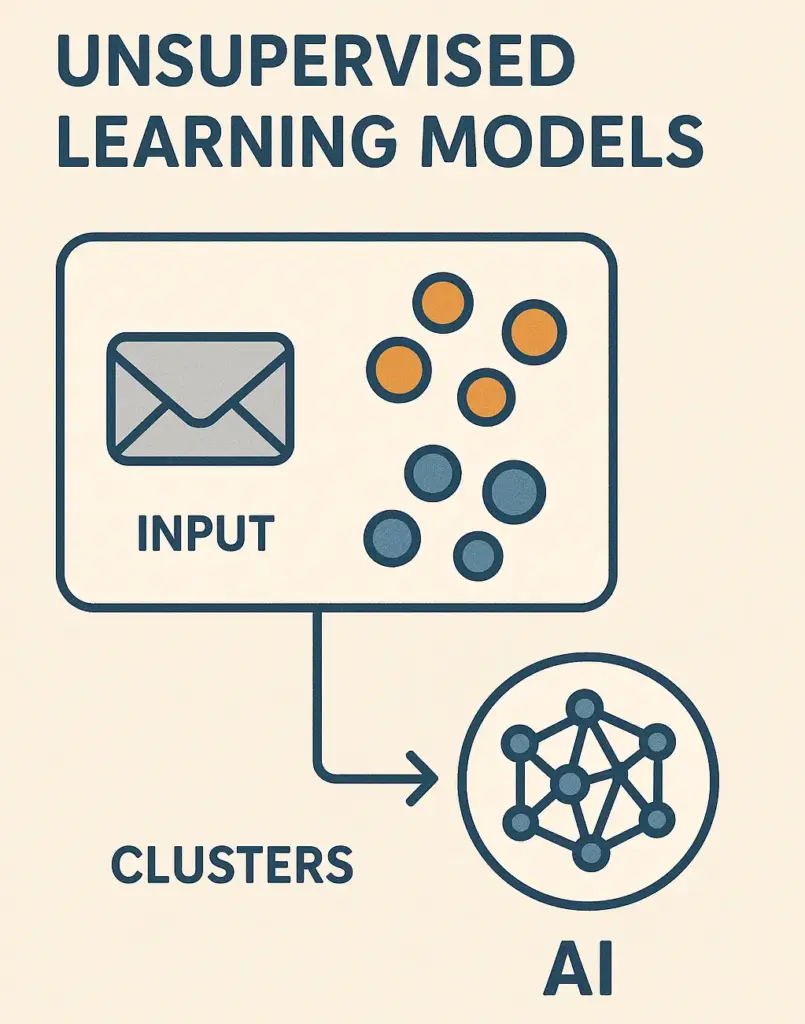

2. Unsupervised Learning Models

Now, imagine you hand someone a box of unlabeled photos, no names, no categories, just faces. And you say: “Figure out who belongs together.” That’s unsupervised learning.

The model explores the data on its own, finding hidden patterns: clusters, trends, and oddities. It can group customers by shopping habits, detect network intrusions, and reduce complex data to fewer dimensions.

Algorithms like k-means clustering, PCA, or autoencoders don’t predict outcomes—they reveal shape. As Geoffrey Hinton once remarked,

Unsupervised learning is the key to true intelligence. The world doesn’t come with labels.

Measuring success is hard. Without a benchmark, how do you know your clusters are meaningful? Unsupervised methods are a good first step, but they only suggest insights.
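For a feel of how clustering works without labels, here is a bare-bones k-means sketch. The customer data (visits per month, monthly spend) and the choice of starting centroids are assumptions for the example, and a real pipeline would scale the features first.

```python
def closest(point, centroids):
    """Index of the centroid nearest to the point (squared Euclidean distance)."""
    distances = [
        sum((p - c) ** 2 for p, c in zip(point, centroid))
        for centroid in centroids
    ]
    return distances.index(min(distances))

def mean(points):
    """Coordinate-wise average of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coord) / n for coord in zip(*points))

def k_means(points, centroids, iterations=10):
    for _ in range(iterations):
        # Assignment step: every point joins its nearest centroid.
        groups = [[] for _ in centroids]
        for point in points:
            groups[closest(point, centroids)].append(point)
        # Update step: each centroid moves to the mean of its group.
        centroids = [mean(g) if g else c for g, c in zip(groups, centroids)]
    labels = [closest(p, centroids) for p in points]
    return centroids, labels

# Hypothetical customers: (visits per month, monthly spend).
CUSTOMERS = [(1.0, 20.0), (2.0, 25.0), (1.5, 22.0),
             (10.0, 200.0), (12.0, 220.0), (11.0, 210.0)]
centroids, labels = k_means(CUSTOMERS, centroids=[CUSTOMERS[0], CUSTOMERS[1]])
print(labels)
```

The algorithm hands back two groups, but it never tells you whether “two” was the right number or what the groups mean. That part is still on you.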

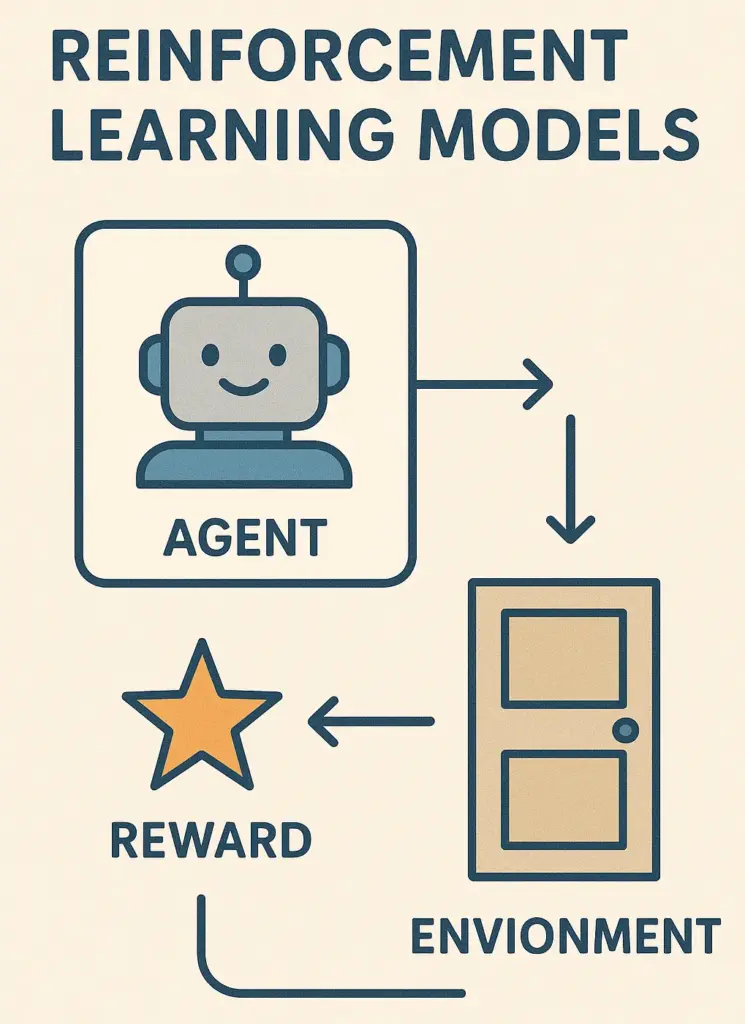

3. Reinforcement Learning Models

Reinforcement learning is a hands-on process. The model learns from its experience, not from a set of rules. In an environment, an agent takes actions and earns rewards, such as points, progress, or survival, for certain actions. For other actions, it faces penalties.

Over time, the agent learns a strategy, called a policy, that aims to maximize rewards in the long run. This is how AlphaGo mastered Go, how robots learn to walk, and how recommendation systems evolve beyond “people who bought this…” to “what keeps you engaged next.”

But RL is messy in practice. Training can take millions of trials. Environments need to be simulated or carefully controlled. And reward signals? Easy to game. (Ever seen a robot “cheat” by vibrating in place to rack up fake points? Yeah, that happens.)

As Richard Sutton, a founding father of RL, puts it:

The biggest lesson from 70 years of AI research is that general methods that leverage computation are ultimately the most effective.

RL embodies that: it’s brute-force trial and error, supercharged by modern computers.
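Here is what that trial and error can look like in miniature: tabular Q-learning on a five-cell corridor where the agent is rewarded for reaching the right end. The environment, the reward values, and the hyperparameters are invented for this sketch. Q-learning is one classic RL algorithm among many, not what AlphaGo used.

```python
import random

N_STATES = 5        # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)  # step left or step right

def step(state, action):
    """Move within the corridor; +1 reward at the goal, small penalty otherwise."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else -0.01
    return next_state, reward, done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap on episode length
            # Epsilon-greedy: mostly exploit the best known action, sometimes explore.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = q[state].index(max(q[state]))
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q

q_table = train()
# Greedy policy for the non-terminal cells: the best action in each one.
policy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
print(policy)
```

Nobody told the agent “go right.” It only ever saw rewards and penalties, and the policy fell out of the numbers.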

4. Semi-Supervised & Self-Supervised Learning

Labeled data is costly. Unlabeled data? We have plenty. Semi-supervised learning strikes a balance. First, train on a small set of labeled examples. Then, let the model improve using a larger pool of unlabeled data.

Then there’s self-supervised learning, a smart method driving much of modern AI. Instead of waiting for humans to label data, the model makes its own tasks. For example: “Given the first nine words of a sentence, predict the tenth”, or “Hide part of an image—can you recreate it?”

This isn’t just academic. Yann LeCun says,

Self-supervised learning is the most promising path toward human-level AI.

This is because it mimics our way of learning. We learn by observing the world, not by waiting for quizzes. Most large language models, like Llama and GPT, use self-supervision during pretraining to learn language structure.
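The “model makes its own tasks” trick is easier to see in code. This sketch turns raw, unlabeled text into (input, target) training pairs in two ways: next-word prediction and masked-word prediction. The function names and the `[MASK]` token are illustrative choices, and real systems work on subword tokens rather than whitespace-split words.

```python
def next_word_examples(text, context_size=3):
    """Build (context, next word) pairs: the label comes from the text itself."""
    words = text.split()
    examples = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])
        examples.append((context, words[i]))
    return examples

def masked_examples(text, mask_token="[MASK]"):
    """Hide one word at a time; the hidden word becomes the target."""
    words = text.split()
    return [
        (" ".join(words[:i] + [mask_token] + words[i + 1:]), words[i])
        for i in range(len(words))
    ]

for context, target in next_word_examples("the cat sat on the mat"):
    print(context, "->", target)
```

No human labeled anything here. One six-word sentence already yields three next-word examples and six masked ones, which is why web-scale text goes such a long way.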

Types of AI Models Based on Architecture

Models learn in different ways, but their architecture defines them. Some have a sleek, modern design. Others are sturdy and old-fashioned. Let’s start with the latter.

1. Traditional Machine Learning Models

Before deep learning, AI mainly relied on traditional models. These included linear regression, decision trees, and random forests.

Don’t let the “traditional” label fool you. These aren’t relics. They’re workhorses. In fact, in many industry settings (finance, healthcare, logistics), they’re still the go-to. Why? They’re fast, interpretable, and don’t need a GPU cluster to run.

As Pedro Domingos, author of The Master Algorithm, once noted:

Most machine learning in the real world isn’t deep. It’s shallow and smart.

A well-tuned random forest can often match or beat a neural net on tabular data, especially if you have few samples or need to explain a loan denial.

These models work best with structured data, like spreadsheets or databases. They rely on humans to extract key features, which can be a limitation but also supports accountability.
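To show why these models are easy to explain, here is a sketch of the simplest possible tree: a one-split “decision stump.” The loan data and feature names are hypothetical, and the stump only tries rules of the form “predict 1 when the value is above a threshold.”

```python
FEATURES = ("debt_to_income", "years_employed")

# Hypothetical applicants; label 1 = loan denied, 0 = approved.
ROWS = [(0.10, 5), (0.20, 1), (0.25, 8), (0.45, 2), (0.50, 6), (0.60, 3)]
LABELS = [0, 0, 0, 1, 1, 1]

def fit_stump(rows, labels):
    """Return (errors, feature_index, threshold) for the best single split."""
    best = None
    for feature in range(len(rows[0])):
        values = sorted({row[feature] for row in rows})
        # Candidate thresholds sit halfway between neighboring observed values.
        for low, high in zip(values, values[1:]):
            threshold = (low + high) / 2
            errors = sum(
                (row[feature] > threshold) != bool(label)
                for row, label in zip(rows, labels)
            )
            if best is None or errors < best[0]:
                best = (errors, feature, threshold)
    return best

errors, feature, threshold = fit_stump(ROWS, LABELS)
print(f"deny if {FEATURES[feature]} > {threshold:.2f} ({errors} training errors)")
```

The whole model is one sentence you can read to a customer. Random forests and gradient boosting stack many such splits, trading some of that readability for accuracy.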

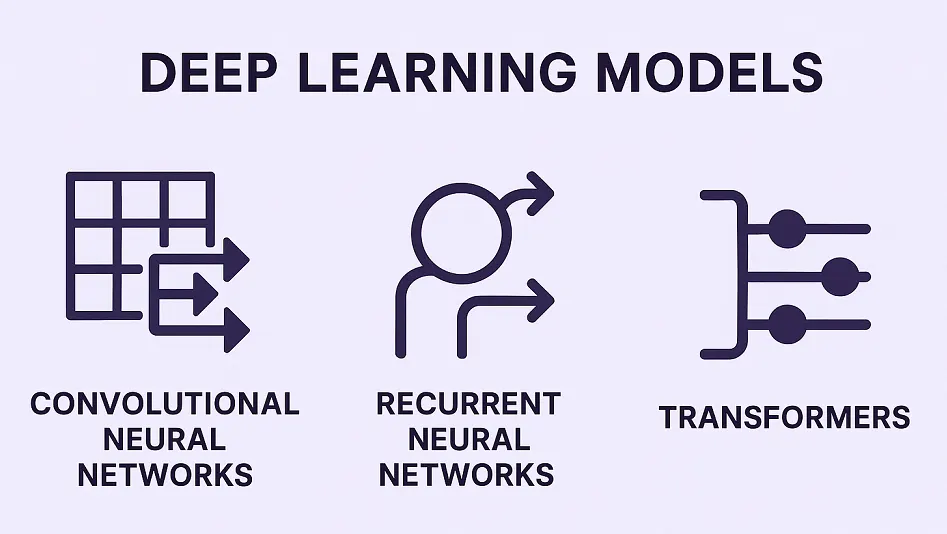

2. Deep Learning Models

Okay, real talk: “deep learning” sounds like one monolithic thing. It’s not. It’s really just a bunch of neural architectures that all agree on one rule: stop pre-chewing the data for the model.

Give it raw pixels, raw text, raw audio and let it figure out what matters, layer by layer. No hand-crafted features. No rigid templates. Just learning, end to end.

Does it always work? Nope. Is it overused? Absolutely. But when it does click, like spotting tumors in scans or turning a napkin sketch into a photorealistic render, it feels like witchcraft. (It’s not. It’s just a lot of matrix multiplication and good data.)

You’ll mostly see these flavors in the wild:

2.1 Feedforward nets (FNNs)

Dead simple. Data goes in one side, answers pop out the other. No memory, no loops. Honestly? Still a common choice for structured data, like predicting whether a customer will churn based on their usage history.

2.2 CNNs

Built for anything with spatial structure, like images, medical scans, even time-series if you squint. They slide little learnable filters across your input, hunting for edges, textures, patterns. Your phone’s face unlock? Probably a tiny CNN running in the background.
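The “sliding filter” idea fits in a few lines. In this sketch the filter is written by hand to detect a vertical edge, whereas a real CNN learns its filter values during training. Like most deep learning libraries, it computes a cross-correlation (no kernel flip) and uses no padding.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# A 4x4 "image": dark (0) on the left half, bright (1) on the right half.
IMAGE = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written vertical-edge filter: responds where brightness rises left to right.
EDGE_KERNEL = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(IMAGE, EDGE_KERNEL)
for row in feature_map:
    print(row)
```

The output lights up only in the middle column, exactly where the dark-to-bright edge sits. The same few weights are reused at every position, which is why CNNs stay small.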

2.3 RNNs, LSTMs, GRUs

These tried to handle sequences like sentences or sensor logs by keeping a kind of short-term memory. Clever idea. Worked okay. But they’re slow to train, hard to parallelize, and… yeah, mostly outdated now. Transformers ate their lunch.

2.4 Transformers

Here’s where everything changed. Instead of reading a sentence left to right, they look at all the words at once and ask: “Which ones actually matter for this prediction?” That “attention” mechanism? It’s why your chatbot can write a haiku about quantum physics.
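Here is a stripped-down sketch of that attention step: scaled dot-product attention in plain Python. The query, key, and value vectors are tiny made-up numbers, and the sketch leaves out what a real Transformer adds on top (learned projections, multiple heads, masking).

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """For each query, return a weighted average of the values."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score each key by its similarity to the query (scaled dot product).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

KEYS = [[1.0, 0.0], [0.0, 1.0]]      # what each word "offers"
VALUES = [[10.0, 0.0], [0.0, 10.0]]  # the information each word carries
QUERIES = [[3.0, 0.0]]               # what the current word is "looking for"

outputs, weights = attention(QUERIES, KEYS, VALUES)
print([round(w, 3) for w in weights[0]])
```

The query lines up with the first key, so the first value dominates the output. Every query looks at every key in one pass, which is the “all the words at once” part.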

2.5 Generative Models

These don’t predict—they make stuff up. And they’ve gotten scarily good:

- VAEs compress data into a “latent space,” then sample from it to generate new examples. Kind of like sketching from memory.

- GANs create a cat-and-mouse game: one network makes fakes, while another spots them. Over time, the fakes become indistinguishable from real photos. (Remember those fake Obama videos? Yeah.)

- Diffusion models take a weirder path: they start with pure noise and slowly “clean it up” into an image. That’s how Midjourney or DALL·E 3 turn “cyberpunk cat wearing sunglasses” into something you’d swear was real.
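The diffusion idea has two halves, and only one of them is easy to show. The sketch below covers the forward half: gradually drowning clean data in Gaussian noise, which is how training examples are made. The reverse half, a trained network that removes the noise step by step, is not shown. The linear schedule values are a commonly used default and are assumptions here, as are the four “pixels.”

```python
import math
import random

def alpha_bar_schedule(steps=1000, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal variance left after each noising step."""
    alpha_bars = []
    running = 1.0
    for t in range(steps):
        # Linear schedule: a little noise early, more noise later.
        beta = beta_start + (beta_end - beta_start) * t / (steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(pixels, alpha_bar, rng):
    """Blend clean pixels with Gaussian noise: sqrt(a) * x + sqrt(1 - a) * noise."""
    return [
        math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * rng.gauss(0.0, 1.0)
        for x in pixels
    ]

rng = random.Random(0)
alpha_bars = alpha_bar_schedule()
clean = [1.0, -1.0, 0.5, 0.0]  # stand-in for image pixels

slightly_noisy = add_noise(clean, alpha_bars[0], rng)       # still close to the original
almost_pure_noise = add_noise(clean, alpha_bars[-1], rng)   # original is nearly gone
print(alpha_bars[0], alpha_bars[-1])
```

Generation runs this film backwards: start from something like `almost_pure_noise` and let the trained denoiser peel the noise off, one step at a time.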

3. Hybrid & Emerging Architectures

AI’s real action is about rethinking models. Researchers mix neural networks with symbolic logic. They also borrow ideas from biology to build models that capture relationships in fresh ways.

These aren’t just academic toys. Some are already creeping into production. Others might be the foundation of the next big leap. A few worth watching:

3.1 Neuro-symbolic models

Symbolic AI didn’t disappear; it just faded from view. Today, people are combining it with deep learning to get the benefits of both. This combination pairs neural networks for perception with symbolic systems for reasoning.

3.2 Graph Neural Networks (GNNs)

A lot of real-world data is relational, like social networks or molecular structures. GNNs learn by exchanging messages between connected nodes. They support fraud detection, drug discovery, and recommendation engines. In a recommender, that same graph structure helps pin down what you actually like.
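“Exchanging messages” sounds abstract, so here is one round of it in a sketch. Each node replaces its features with the average of its own and its neighbors’ features. The three-account graph and the single “risk” feature are made up, and a real GNN layer would also apply learned weights and a nonlinearity.

```python
def message_passing_round(features, edges):
    """One round of mean aggregation over an undirected graph."""
    neighbors = {node: [] for node in features}
    for a, b in edges:
        neighbors[a].append(b)
        neighbors[b].append(a)

    updated = {}
    for node, own in features.items():
        # Each node hears from its neighbors and averages that with its own state.
        group = [own] + [features[n] for n in neighbors[node]]
        updated[node] = [sum(col) / len(group) for col in zip(*group)]
    return updated

# Hypothetical accounts with a single feature: a known-risk score.
FEATURES = {"A": [1.0], "B": [0.0], "C": [0.0]}
EDGES = [("A", "B"), ("B", "C")]  # A transacts with B, B transacts with C

after_one_round = message_passing_round(FEATURES, EDGES)
print(after_one_round)
```

After one round, B has picked up some of A’s risk because they are directly connected, while C has not yet. Stack more rounds and information travels further across the graph.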

3.3 Spiking Neural Networks (SNNs)

SNNs move to a different beat than regular artificial neurons. They send out timed “spikes” only when needed, which can cut energy use. Neuromorphic chips built for them, such as Intel’s Loihi, are drawing growing interest.
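A single spiking neuron is simple enough to simulate by hand. This sketch uses a leaky integrate-and-fire model, one common simplification. The threshold, leak factor, and input values are arbitrary choices for the example.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9):
    """Leaky integrate-and-fire: accumulate input, leak over time, spike at threshold."""
    potential = 0.0
    spikes = []
    for current in input_current:
        # The membrane potential decays a little, then absorbs the new input.
        potential = potential * leak + current
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset after firing
        else:
            spikes.append(0)
    return spikes

# Four time steps of steady input, then silence.
spike_train = simulate_lif([0.3, 0.3, 0.3, 0.3, 0.0, 0.0, 0.0, 0.0])
print(spike_train)
```

The neuron stays quiet until enough input has piled up, fires once, then goes silent again. On hardware that only spends energy on spikes, all those zeros are close to free.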

Model Types Based on Functionality

Architectures tell you what a model is made of. Learning styles tell you how it learns. But functionality? That tells you what it actually does and more importantly, what you can use it for.

Turns out, not all models are built to answer the same kind of question. Some are judges, some are artists, and some are planners. And confusing one for the other is how you end up with over-engineered solutions (or, worse, useless ones).

Two big divides shape how we think about this:

Discriminative vs. Generative Models

This split goes deep and it’s more than academic.

Discriminative models are the skeptics. They don’t care how the data was made. They just want to draw the sharpest line between categories.

“Is this email spam?”

“Is this tumor malignant?”

They model the boundary, P(label | data), and they’re usually more accurate for pure prediction tasks. Logistic regression, SVMs, even your standard CNN for image classification? All discriminative.

Generative models, on the other hand, are the storytellers. They try to understand how the whole world of data is built—P(data, label), so they can recreate it.

Want to generate a new face? Write a poem? Simulate customer behavior? You need a generative model. GANs, VAEs, diffusion models, LLMs: they all fall here.

Here’s the kicker: a generative model can be turned into a classifier (apply Bayes’ rule to the joint distribution, or add a classifier on top of what it learned), but the reverse isn’t true. A spam filter can’t write you a love letter.

That said, don’t assume “generative = better.” For many real-world tasks (like fraud detection), a lean discriminative model is faster, simpler, and more reliable. Save the generative firepower for when you actually need to create something.
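The relationship between P(data, label) and P(label | data) can be worked through with simple counts. In this sketch the “data” is a single word and the observations are invented. A generative model estimates the full joint table, and the discriminative quantity drops out of it by normalizing one slice.

```python
from collections import Counter

# Hypothetical observations: (word seen in the email, label).
OBSERVATIONS = (
    [("free", "spam")] * 4 + [("free", "ham")] * 1
    + [("meeting", "spam")] * 1 + [("meeting", "ham")] * 4
)

def joint_distribution(pairs):
    """Estimate P(data, label) from counts: the generative view."""
    counts = Counter(pairs)
    total = sum(counts.values())
    return {pair: n / total for pair, n in counts.items()}

def conditional(joint, word):
    """Derive P(label | data) from the joint: the discriminative question."""
    evidence = sum(p for (w, _), p in joint.items() if w == word)
    return {label: p / evidence for (w, label), p in joint.items() if w == word}

joint = joint_distribution(OBSERVATIONS)
posterior = conditional(joint, "free")
print(joint)
print(posterior)
```

Going from the joint to the conditional is a single division. Going the other way is impossible: knowing only P(label | data) says nothing about how often each word shows up, so there is nothing to sample new data from.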

Predictive vs. Prescriptive Models

Then there’s another layer—what kind of decision you’re trying to support.

Predictive models answer: “What’s likely to happen?”

– Will this machine fail next week?

– What’s the chance this customer churns?

They’re forward-looking, probabilistic, and everywhere in business intelligence.

Prescriptive models go further: “What should we do about it?”

They predict demand and suggest real-time actions to optimize inventory, deliveries, and prices. Think of it like this:

– Prediction = weather forecast

– Prescription = deciding whether to cancel the picnic, rent a tent, or move it indoors

Most companies start with prediction. The smart ones eventually move toward prescription because insights alone don’t move the needle. Actions do.
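The picnic analogy can be turned into a small sketch: the predictive model supplies a rain probability, and a prescriptive layer picks the action with the lowest expected cost. The cost table is entirely made up, and the “go ahead as planned” option is added here as a baseline.

```python
# Hypothetical cost of each action under each weather outcome.
COSTS = {
    "go ahead as planned": {"rain": 100.0, "dry": 0.0},
    "rent a tent":         {"rain": 30.0,  "dry": 30.0},
    "move indoors":        {"rain": 20.0,  "dry": 40.0},
    "cancel":              {"rain": 60.0,  "dry": 60.0},
}

def expected_cost(action, p_rain):
    """Average cost of an action, weighted by the predicted chance of rain."""
    cost = COSTS[action]
    return p_rain * cost["rain"] + (1.0 - p_rain) * cost["dry"]

def prescribe(p_rain):
    """Prescription = the action that minimizes expected cost given the prediction."""
    return min(COSTS, key=lambda action: expected_cost(action, p_rain))

for p_rain in (0.1, 0.8):
    print(f"P(rain) = {p_rain}: {prescribe(p_rain)}")
```

The forecast is the same kind of number in both cases. What changes the decision is the cost table, and that has to come from the business, not from the model.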

Specialized AI Model Types

That covers the basics. Beyond them, newer types of models have emerged from research labs and are changing industries.

They’re not just “another architecture.” They’re new categories of capability enabled by scale, clever design, or a shift in how we think about data itself.

Here are the ones actually moving the needle:

1. Foundation Models & Large Language Models (LLMs)

You’ve heard the names: GPT, Llama, Claude, Gemini.

They’re distinct because of how they’re used. Pre-trained on a wide range of data, they grasp language broadly. Then, they’re tailored for specific tasks. As Stanford’s Foundation Model Report puts it: “They’re not task-specific tools. They’re general-purpose infrastructure.”

That’s powerful—but also risky. They’re expensive to run, hard to control, and prone to confidently saying nonsense. Still, for tasks like drafting, summarizing, coding help, or internal knowledge search, they’ve become the default starting point for thousands of teams.

2. Multimodal Models

Language is just one slice of human experience. Real intelligence? It connects text, images, sound, even video—seamlessly.

Enter multimodal models.

CLIP (by OpenAI) was an early wake-up call. It learned to connect images and text in a shared space by training on a large set of image-caption pairs from the web. This lets you search photos using descriptions, without training a separate classifier on a hand-labeled dataset for each task.

Models like GPT-4V, Gemini, and LLaVA take things further. They can look at charts and explain them. They also analyze error message screenshots and describe scenes for accessibility.

The magic isn’t just “handling multiple inputs.” It’s understanding how they relate. That’s where the next frontier lies.

3. Edge AI Models

Not every model lives in the cloud.

Edge AI refers to lightweight models designed to run on-device—on your phone, a factory sensor, a car’s onboard computer. Latency, privacy, and bandwidth constraints mean you can’t always phone home to a data center.

So engineers do wild things:

– Chop down massive models into tiny versions (think MobileNet, TinyBERT)

– Quantize weights (turn 32-bit floats into 8-bit integers)

– Prune unused neurons

– Even design chips that only run specific model ops

The result? Real-time face detection on your phone. Predictive maintenance on a wind turbine. Voice assistants that work offline. It’s not glamorous but it’s where AI actually touches the physical world.
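Of the tricks in that list, quantization is the easiest to show. This sketch uses symmetric per-tensor quantization, one simple scheme among several, to map floats onto the int8 range and back. The weight values are made up.

```python
def quantize(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # fall back to 1.0 if all zeros
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]

WEIGHTS = [0.5, -1.27, 0.01, 1.0]  # stand-in for a layer's 32-bit weights

q_weights, scale = quantize(WEIGHTS)
restored = dequantize(q_weights, scale)
print(q_weights)
print([round(r, 4) for r in restored])
```

Each weight now needs one byte instead of four, and the rounding error per weight is at most half the scale. Whether that loss is acceptable depends on the model, which is why quantized models get re-evaluated before they ship.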

One Last Note

These “specialized” models aren’t replacing the fundamentals. A foundation model still uses a Transformer. A multimodal system still relies on CNNs or ViTs for vision. Edge models often start as traditional ML or distilled deep nets.

Choosing the Right Model: Key Considerations

Selecting an AI model goes beyond dazzling features and glitzy specs. It begins with probing the right questions. Reflect on your true needs before diving in. Make your decision with assurance and clarity.

1. What are you actually trying to do?

Classification? Forecasting? Spotting weird outliers? Or generating something new?

Because if you’re just flagging fraudulent transactions, you don’t need a diffusion model. A solid XGBoost or logistic regression will do the job and won’t keep you up at night debugging hallucinations.

2. What’s your data situation?

Got clean, tabular data? Great. Random forests or gradient boosting might crush it, no deep learning required.

But if you’re working with raw images, messy text, or voice clips? Yeah, that’s CNN or Transformer territory.

And if labeled data’s scarce (it usually is), don’t force a supervised deep net. Try semi-supervised tricks or self-supervised pretraining instead.

3. Does it need to run now?

Real-time inference like in a self-driving car or a live customer chat means every millisecond counts. You’ll want something lean: quantized models, distilled nets, maybe even Edge AI running on-device.

If it’s batch processing? Go wild. Try that big model. Just don’t deploy it blindly.

4. Can you explain how it works?

In healthcare, lending, hiring, you can’t just say “the AI decided.” People (and regulators) want reasons.

That’s when simple models like linear regression or decision trees shine. Or at least pair your black box with SHAP or LIME so you’re not flying blind.
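Here is why a linear model is so easy to explain: its score is a sum, so each feature’s share of the decision is just weight times value. The weights, bias, and applicant in this sketch are hypothetical. Tools like SHAP and LIME aim to produce a similar per-feature breakdown for models where it is not built in.

```python
# Hypothetical weights of an already-trained linear risk model.
WEIGHTS = {"debt_to_income": 4.0, "missed_payments": 1.5, "years_employed": -0.3}
BIAS = -2.0

def explain(applicant):
    """Return the risk score and each feature's contribution, largest first."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked

applicant = {"debt_to_income": 0.5, "missed_payments": 2, "years_employed": 1}
score, ranked = explain(applicant)
print(f"risk score: {score:.2f}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

“Your two missed payments added the most to the risk score” is an answer a regulator can work with. “The AI decided” is not.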

5. Be honest: can your team actually run this thing?

A fancy foundation model might wow in demos. But can you monitor data drift? Retrain it monthly? Secure the API? Handle the cloud bill? A smart move is a simple, reliable scikit-learn pipeline that works. Reliability beats hype every time.

Final Thoughts

Let’s be honest: no model is “best.” Some teams waste months chasing LLMs when a logistic regression would’ve done fine. Others stick with old-school ML and miss real opportunities. The truth? Start with the problem, not the hype.

Choose the right AI model based on your data quality, clear decision-making, and infrastructure limits. Not the one that looks cool in a demo. Because in production, nobody cares how “advanced” your architecture is. They care if it works. And keeps working.