Your morning alarm woke you during a light sleep phase, tracked by AI. Your map app routed you around traffic using real-time predictions. A streaming service suggested music that matched your mood. An HR algorithm may have screened your job application without you ever knowing.

Artificial Intelligence is no longer sci-fi. It’s in your daily life, making recommendations and decisions. But AI has no values, only what we teach it. If we’re biased, secretive, or careless, that’s what it learns.

That’s why ethical AI isn’t just a debate for engineers in lab coats. It’s a human issue. Because when algorithms start deciding who gets a loan, who sees a job ad, or whose medical scan gets flagged first, fairness isn’t a nice-to-have. It’s oxygen.

Key Takeaways

1. AI impacts your daily life, often without you noticing.

2. Ethical AI means fair, clear, and safe technology designed for people.

3. Poor data and weak oversight lead to biased or harmful results.

4. You can challenge this: ask how decisions are made.

5. Good AI ethics benefit everyone, not just tech firms.

What Is Ethical AI? (It’s Not About Robots Having Morals)

Ethical AI isn’t about building machines that feel guilty. It’s about building systems that don’t harm, exclude, or deceive people, whether intentionally or not.

At its core, ethical AI means creating and using artificial intelligence that reflects human values. Imagine teaching a smart, literal child what is right and wrong. You do this only through the examples you provide and the rules you set.

Here are the five pillars everyone should know:

1. Fairness: AI shouldn’t favor one group over another.

Example: An AI hiring tool trained mostly on resumes from men might downgrade applications from women, not because it “hates” women, but because it learned patterns skewed by historical inequality.

2. Transparency: You should know why an AI made a decision about you.

If your loan gets denied by an algorithm, you deserve more than “computer says no.” You deserve to know which factors mattered most.

3. Accountability: When AI messes up, someone must answer for it.

If a self-driving car causes a fatal crash, who answers for it? The programmer? The company? The data supplier? Ethical AI demands clear responsibility chains.

4. Privacy: Just because data can be collected doesn’t mean it should.

That fitness app tracking your heart rate? It might sell insights to insurers. Ethical AI respects boundaries and consent.

5. Safety & Reliability: AI should work as intended without unexpected harm.

A medical diagnostic tool must be rigorously tested. An AI chatbot shouldn’t give dangerous mental health advice just because it “sounded confident.”

Real talk: Ethical AI isn’t about perfection. It’s about intentional effort to minimize harm and maximize dignity.

Why Ethics in AI Isn’t Optional—It’s Survival

Let’s play a quick game: Name three ways AI has personally impacted you this week. Chances are, you listed:

- Spotify’s playlist

- Google Maps avoiding construction

- Instagram’s eerily accurate ad for those hiking boots you just texted about

Now, flip it: How could those same tools hurt you? That “perfect” job ad? It might only show roles to men aged 25–40 because the algorithm learned that demographic converts best.

That “helpful” health chatbot? It might miss conditions that present differently on darker skin because its training data was 80% light-skinned patients.

That neighborhood crime-prediction tool? It could flood Black communities with police patrols simply because arrest data from biased policing was used to train it.

These harms aren’t hypothetical. Amazon scrapped an AI recruiting tool after discovering it penalized resumes containing the word “women’s” (like “women’s chess club captain”).

Commercial facial analysis systems from major vendors misclassified darker-skinned women at error rates of up to about 35%, compared with under 1% for lighter-skinned men (MIT Media Lab, 2018).

Unethical AI amplifies bias and destroys privacy and trust. Ethical AI, by contrast, benefits everyone:

- Consumers get fairer prices, safer products, and respectful service.

- Businesses reduce legal risk, build brand trust, and tap into broader markets.

- Society gains tools that heal divides instead of deepening them.

The 4 Big Ethical Headaches Plaguing AI Today

1. Bias: When AI Inherits Our Prejudices

AI learns from data. If that data reflects historical discrimination (say, mortgage approvals skewed against certain ZIP codes), the AI “learns” that discrimination is normal.

Take healthcare algorithms. A 2019 study in Science found an AI used by U.S. hospitals to prioritize care flagged white patients over sicker Black patients. Why? It used past healthcare spending as a proxy for need. But systemic inequities meant Black patients spent less despite being sicker. The AI saw “spent less” = “lower need.” Garbage in, gospel out.
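To see how a proxy can flip priorities, here is a minimal sketch. The patients, condition counts, and dollar amounts are invented for illustration and are not taken from the study; the function name `top_priority` is also hypothetical.

```python
# Toy illustration only: these patients and numbers are invented,
# not taken from the study described above.
patients = [
    # (name, chronic_conditions, past_spending_usd)
    ("A", 2, 9000),
    ("B", 5, 4000),  # sickest patient, but historically low spending
    ("C", 3, 7000),
    ("D", 1, 8000),
]


def top_priority(records, key_index, slots):
    """Return the names of the `slots` patients ranked highest on one column."""
    ranked = sorted(records, key=lambda r: r[key_index], reverse=True)
    return [r[0] for r in ranked[:slots]]


# Rank by the proxy (spending) and by the thing we actually care about (illness).
by_spending = top_priority(patients, key_index=2, slots=2)
by_need = top_priority(patients, key_index=1, slots=2)

print("Prioritized when ranking by spending:", by_spending)
print("Prioritized when ranking by need:    ", by_need)
```

In this toy data the sickest patient never makes the spending-based list. The model is not "wrong" about spending; it was simply asked the wrong question.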

2. The Black Box Problem: “Trust Me, I’m an Algorithm”

Many powerful AI models (like deep neural nets) are black boxes: even their creators can’t fully explain why they made a specific decision.

Imagine being denied a mortgage. You ask why. The bank says, “Our model scored you 62.7%.” Not helpful, and in many jurisdictions not enough to satisfy the law. The EU’s GDPR, for example, is widely read as granting a “right to explanation” for automated decisions. But try explaining how a 175-billion-parameter model “felt” about your credit history.
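For contrast, here is what an explainable decision can look like. This is a minimal sketch of a simple additive scoring model, where each factor's contribution can be read off directly. The weights, baseline, and feature names are hypothetical, and a real credit model would be far more complex; for deep networks, explanations have to be approximated with other techniques.

```python
# Hypothetical weights and baseline, chosen only for illustration.
WEIGHTS = {
    "on_time_payment_rate": 40.0,
    "debt_to_income_ratio": -30.0,
    "years_of_credit_history": 1.5,
}
BASELINE = 30.0


def explain_score(applicant):
    """Return the score and each factor's contribution, largest impact first."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BASELINE + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# An invented applicant.
applicant = {
    "on_time_payment_rate": 0.9,
    "debt_to_income_ratio": 0.5,
    "years_of_credit_history": 4,
}

score, ranked = explain_score(applicant)
print(f"Score: {score:.1f}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.1f}")
```

Instead of “computer says no,” the applicant sees which factors pushed the score up or down, and by how much.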

3. Accountability: Who Takes the Fall When AI Fails?

If an AI medical scanner misses a tumor, who’s liable? The hospital? The software vendor? The data annotator who missed a critical label? The model trainer?

Current legal frameworks weren’t built for shared, diffuse responsibility. When harm is algorithmic, blame gets algorithmic too, vanishing into layers of outsourcing and licensing agreements.

4. Privacy: The Quiet Trade of Your Life for Convenience

You trade data daily for free apps, personalized ads, and faster service. But what’s the cost?

- Emotion tracking AI analyzes your facial micro-expressions during job interviews.

- Smart home devices listen for “wake words”… but sometimes catch a lot more.

- Social scoring systems (like China’s controversial models) combine behavior, spending, and social ties to assign “trustworthiness.”

We’re sleepwalking into surveillance capitalism. Ethical AI insists on minimal data collection, meaningful consent (not 50-page terms no one reads), and user control. You should be able to say:

Delete my data. Stop profiling me. Show me what you know.

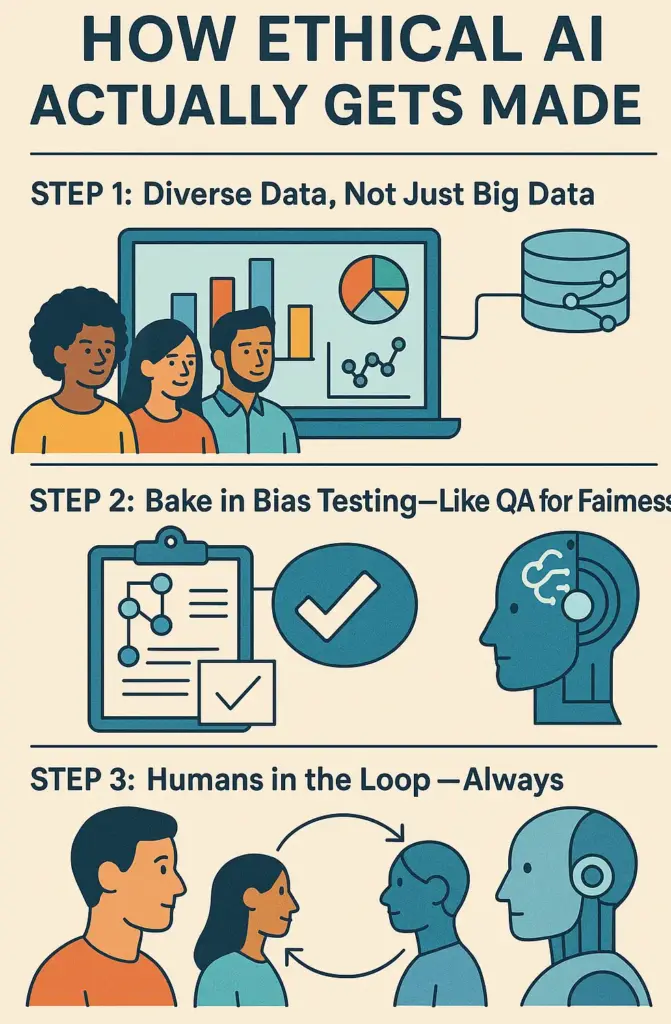

How Ethical AI Actually Gets Made

It’s tempting to think ethics is just a PR sticker slapped on AI after launch. But real ethical AI starts before code is written.

Step 1: Diverse Data, Not Just Big Data

Big datasets sound impressive. But if your training images are 90% light-skinned faces, your facial recognition will fail darker skin tones. Period.

Solution? Intentional data curation.

- Include underrepresented groups not just in volume, but in nuance (e.g., dialect diversity in voice AI).

- Audit datasets for historical skews. If women were underrepresented in tech hiring for 20 years, don’t train on that era alone.

- Use synthetic data carefully to fill gaps but never as a band-aid over real-world exclusion.
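A dataset audit can start very simply. The sketch below counts how much of a dataset each group makes up and flags any group below a chosen share. The function name `audit_representation`, the group labels, and the 25% threshold are assumptions made for this example, not a standard.

```python
from collections import Counter


def audit_representation(labels, expected_groups, min_share):
    """Return each expected group's share of the data and the groups below min_share."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    # Use expected_groups so a group with zero examples still shows up as 0%.
    shares = {group: counts.get(group, 0) / total for group in expected_groups}
    flagged = [group for group in expected_groups if shares[group] < min_share]
    return shares, flagged


# Made-up labels for 100 training images.
labels = ["lighter"] * 90 + ["darker"] * 10
shares, flagged = audit_representation(
    labels, expected_groups=["lighter", "darker"], min_share=0.25
)

for group, share in shares.items():
    print(f"{group}: {share:.0%}")
print("Under-represented:", flagged)
```

Counting heads is only the first pass. It catches volume gaps, not the gaps in nuance (dialects, lighting conditions, age ranges) that still need human judgment.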

Step 2: Bake in Bias Testing—Like QA for Fairness

Good engineers test for crashes. Ethical engineers test for discrimination.

Tools like AI Fairness 360 (IBM) or Fairlearn (Microsoft) let developers run bias checks across gender, race, age, and more. They ask:

- Does this loan model approve Black applicants at half the rate of white applicants with similar profiles?

- Does this resume screener downgrade “female-coded” words (nurse, coordinator) while favoring “male-coded” ones (leader, rockstar)?
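The first question above boils down to comparing approval rates between groups. Here is a minimal sketch in plain Python, using invented decisions. It does not use the AI Fairness 360 or Fairlearn APIs, the helper names are hypothetical, and it does not control for “similar profiles,” which a real audit would need to do.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Map each group to the fraction of its applicants that were approved."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, was_approved in decisions:
        total[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / total[group] for group in total}


def disparity_ratio(rates):
    """Lowest approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Invented loan decisions: (group, approved?)
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparity_ratio(rates)

print("Approval rates:", rates)
print(f"Disparity ratio: {ratio:.2f}")
# Assumed threshold: 0.8 is a commonly cited rule of thumb, not a legal test.
if ratio < 0.8:
    print("Flag for review: approval rates differ sharply between groups.")
```

A low ratio does not prove discrimination on its own, but it tells the team exactly where to look before the model ships.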

Step 3: Humans in the Loop—Always

No AI should make high-stakes decisions alone.

Medical AI? Flag findings for radiologist review.

Parole algorithms? Judges must see the reasoning and be able to override it.

Content moderation? Humans review edge cases AI flags as “toxic.”
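In practice, “human in the loop” is often a routing rule: the AI proposes, and certain cases always go to a person. The sketch below is one way to express that, assuming the model reports a confidence score. The `Prediction` class, the `route` function, and the 0.95 confidence floor are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float  # model's confidence in `label`, between 0 and 1
    high_stakes: bool  # e.g. medical findings or parole recommendations


def route(prediction, confidence_floor=0.95):
    """Decide whether a prediction may be applied automatically or needs a person."""
    if prediction.high_stakes:
        # High-stakes decisions always get human review, however confident the model is.
        return "human_review"
    if prediction.confidence < confidence_floor:
        # Uncertain edge cases also go to a person.
        return "human_review"
    return "auto_apply"


cases = [
    Prediction("scan-1", "possible tumor", 0.99, high_stakes=True),
    Prediction("post-7", "toxic", 0.62, high_stakes=False),
    Prediction("post-8", "not toxic", 0.99, high_stakes=False),
]

for case in cases:
    print(case.case_id, "->", route(case))
```

The key design choice is that stakes are checked before confidence, so a very confident model still cannot act alone on a medical scan.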

Multidisciplinary teams change the DNA of a product. When ethicists, sociologists, lawyers, and community advocates work alongside engineers, they ask:

Who might this hurt? Who’s missing from the table?

Step 4: Global Standards Are Emerging (Finally)

From the EU’s AI Act classifying risk levels (banning social scoring, regulating facial recognition) to the U.S. Blueprint for an AI Bill of Rights, regulation is catching up.

China’s rules require algorithms to be “fair, transparent, and accountable.” Canada mandates algorithmic impact assessments for federal government systems. Brazil’s LGPD gives teeth to data rights.

This patchwork is messy but necessary. Like seatbelts in cars, ethics in AI won’t stifle innovation. It’ll make it sustainable.

You’re Not Powerless—Here’s How to Push Back (and Forward)

You don’t need a PhD in machine learning to shape ethical AI. You vote with attention, clicks, complaints, and your voice.

- Ask “Why?” and “How?”

- Audit Your Own Digital Diet

- Support Brands Doing It Right

- Learn Just Enough to Be Dangerous (In a Good Way)

- Demand Policy That Protects People, Not Just Profits

FAQs: Ethical AI

What exactly is “ethical AI”?

Ethical AI means building and using smart tech in ways that are fair, safe, and respectful of people’s privacy. It’s about humans making choices that keep people safe and fairly treated.

Why should I care about ethical AI?

AI affects hiring, lending, and medical care. Biased or secretive AI can cause harm. Ethical AI prevents this and builds trust.

How does AI become biased in the first place?

AI learns from data, so if the data is biased, AI will be too.

Who’s responsible when an AI messes up?

Developers, companies, and regulators must take responsibility. “The algorithm did it” is no excuse.

Do laws protect people from bad AI?

Partly. The EU has GDPR and the AI Act, and Brazil has the LGPD. AI-specific rules are emerging in the U.S., China, and Canada.

What can I do if I’m worried about an AI decision that affected me?

Ask why decisions were made and what data was used. Check app permissions and choose transparent companies. Push for stronger rules.

Can ethical AI still be good for businesses?

Yes. Ethical AI reduces legal risk, builds trust, and improves products. It’s a long-term win.

Conclusion: We Don’t Need Perfect AI. We Need Human-Centered AI.

Ethical AI isn’t about flawless systems. It’s about accountability when mistakes happen. AI reflects our flaws, but also our aspirations for fairness, clarity, and respect.

This isn’t just on Elon Musk, Mark Zuckerberg, or Sundar Pichai. It’s on teachers using AI grading tools. Doctors trusting diagnostic algorithms. Parents whose kids’ toys listen and learn. Voters targeted by political micro-messaging. Consumers seduced by convenience.

Ethical AI depends on people paying attention and asking tough questions. When an app knows what you want, ask how it learned that, and decide whether you’re okay with how it was built. Our choices determine the future of AI.